Historical:SoC 2008 Project OpenGL Preview



Latest revision as of 18:36, 5 June 2020

Hugin's OpenGL Preview is a fast but approximate previewer, which is more interactive than the previous one. It was James Legg's Google Summer of Code 2008 project, mentored by Pablo d'Angelo.

Image Transformations

The previewer approximates the remapping of each image by fitting it onto a low-resolution mesh. It uses two methods for this:

Remapping Vertices

Taking a point in the input image, we can find where that point is transformed to on the final panorama. Therefore, we can take a uniform grid over an input image, and create a mesh that remaps this grid into the output projection.
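As a rough illustration of the idea (not the code in VertexCoordRemapper), the sketch below lays a uniform grid over an input image and pushes every grid vertex through a forward transform. The function names, the normalised coordinates and the rectilinear-to-equirectangular transform are all assumptions chosen to keep the example self-contained; Hugin's own transformations handle many more projections and lens parameters.

```python
import math

def rectilinear_to_equirect(u, v, hfov_deg=90.0, aspect=1.5):
    """Hypothetical forward transform: normalised image coordinates (u, v)
    in [0, 1] to (longitude, latitude) in degrees, for an undistorted
    rectilinear image centred at yaw 0, pitch 0."""
    half_w = math.tan(math.radians(hfov_deg) / 2.0)
    x = (2.0 * u - 1.0) * half_w            # position on the image plane at z = 1
    y = (2.0 * v - 1.0) * half_w / aspect
    lon = math.degrees(math.atan2(x, 1.0))
    lat = math.degrees(math.atan2(y, math.hypot(x, 1.0)))
    return lon, lat

def build_vertex_mesh(transform, cols, rows):
    """Return a list of quads. Each quad has four vertices, and each vertex
    is a pair (texture coordinate, position on the panorama)."""
    # Transform every grid vertex once, then share vertices between faces.
    verts = [[((c / cols, r / rows), transform(c / cols, r / rows))
              for c in range(cols + 1)]
             for r in range(rows + 1)]
    faces = []
    for r in range(rows):
        for c in range(cols):
            faces.append((verts[r][c], verts[r][c + 1],
                          verts[r + 1][c + 1], verts[r + 1][c]))
    return faces

mesh = build_vertex_mesh(rectilinear_to_equirect, cols=4, rows=2)
```

The texture coordinates stay on a regular grid while the positions are warped, which is why the mesh outline follows the outline of the remapped image.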

Disadvantages:

- This method suffers when the input image crosses over the +/-180° boundary in projections such as Equirectangular Projection, as there will be faces with vertices on either side of the seam. In this case the previewer replaces the face with two faces, one for each side.

- The poles of an equirectangular image cause similar problems. At a pole, the image should cover the whole width of the panorama, but this is only one point in the input image. Vertices in the mesh near the pole define a face that does not cover the whole row. The outer rings of disk-like projections are also handled poorly.

- The detail in the faces might not match up well with the area of the panorama. The faces are all of different sizes, and some mappings may put the detail in parts that cannot be seen as easily as the lower-resolution parts.
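The seam handling from the first point can be sketched as follows. This is only an assumed heuristic, not the previewer's actual test: a face is treated as crossing the +/-180° boundary when its longitudes span more than half the panorama width, and it is then replaced by two shifted copies, one for each edge of the panorama, with the overhanging part of each copy assumed to be cut off by the viewport. The function name is hypothetical.

```python
def split_at_seam(face_lons, width=360.0):
    """face_lons: longitudes in degrees (within [-180, 180]) of one face's
    vertices. Returns a list of longitude tuples: the face unchanged, or two
    shifted copies if it appears to straddle the +/-180 degree seam."""
    if max(face_lons) - min(face_lons) <= width / 2.0:
        return [tuple(face_lons)]           # face does not cross the seam
    # Copy for the left edge: move positive-side vertices down by one width.
    left = tuple(lon - width if lon > 0 else lon for lon in face_lons)
    # Copy for the right edge: move negative-side vertices up by one width.
    right = tuple(lon + width if lon < 0 else lon for lon in face_lons)
    return [left, right]

# A face with two vertices at 170 degrees and two at -170 degrees.
copies = split_at_seam([170.0, -170.0, -170.0, 170.0])
```

A face that legitimately spans more than 180° would be misdetected by this test, so the heuristic only makes sense for meshes whose faces are small compared with the panorama.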

Advantages:

- The mesh resembles the output projection well, and covers roughly the same area as the correct projection. The edges of the mesh lie along the edges of the image, so we don't need to worry about what happens outside of it (except with circular cropping). Most warped images can be displayed reasonably well using this method.

Implementation: This is implemented in the VertexCoordRemapper object. It is used for most images.

Remapping Texture Coordinates

Alternatively, given a point on the final panorama, we can calculate what part of an input image belongs there. Therefore, we can take a uniform grid over the panorama, and map the input image across it.
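A minimal sketch of this direction, again under assumptions rather than taken from TexCoordRemapper: the grid is uniform over an equirectangular panorama, and a hypothetical inverse transform returns the normalised texture coordinate of an undistorted rectilinear image centred at yaw 0, pitch 0, or `None` where the panorama point lies behind the camera.

```python
import math

def equirect_to_rectilinear(lon_deg, lat_deg, hfov_deg=90.0, aspect=1.5):
    """Hypothetical inverse transform: panorama (longitude, latitude) in
    degrees to normalised image coordinates (u, v), or None if the point
    is not in front of the camera."""
    lon, lat = math.radians(lon_deg), math.radians(lat_deg)
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    if z <= 1e-9:
        return None                          # behind or beside the camera
    half_w = math.tan(math.radians(hfov_deg) / 2.0)
    u = (x / z / half_w + 1.0) / 2.0
    v = (y / z * aspect / half_w + 1.0) / 2.0
    return u, v

def build_texcoord_mesh(inverse, cols, rows):
    """Return a (rows + 1) x (cols + 1) grid of vertices. Each vertex is a
    pair (panorama position, texture coordinate or None)."""
    grid = []
    for r in range(rows + 1):
        lat = -90.0 + 180.0 * r / rows
        row = []
        for c in range(cols + 1):
            lon = -180.0 + 360.0 * c / cols
            row.append(((lon, lat), inverse(lon, lat)))
        grid.append(row)
    return grid

mesh = build_texcoord_mesh(equirect_to_rectilinear, cols=16, rows=16)
```

Here the positions stay on a regular grid and the texture coordinates are warped. Coordinates outside the range 0 to 1 fall outside the input image.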

Disadvantages:

- This mapping breaks down at the borders of the input image. The borders of the input image may cross the output mesh at any position. We clip the faces to the input image to avoid this problem.

- This is inefficient for small images, as they do not cover much of the panorama. Many faces are considered outside of the input image and dropped during clipping.

- The quality of the shape of curved images is a little lower than with the vertex coordinate remapping.
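The clipping mentioned in the first two points could look something like the sketch below. The article does not say which algorithm the previewer uses, so this swaps in Sutherland-Hodgman polygon clipping against the unit square in texture space. The vertex layout `(u, v, x, y)` and the function name are assumptions, and linearly interpolating the panorama position along a clipped edge is itself only an approximation.

```python
def clip_face(face):
    """Clip a polygon to the unit square in texture space.
    face: list of (u, v, x, y) vertices, where (u, v) is the texture
    coordinate and (x, y) the position on the panorama.
    Returns the clipped polygon, which is empty if the face lies
    entirely outside the input image."""
    # One pass per boundary: (coordinate index, boundary value, keep >= ?)
    for axis, bound, keep_greater in ((0, 0.0, True), (0, 1.0, False),
                                      (1, 0.0, True), (1, 1.0, False)):
        def inside(p):
            return p[axis] >= bound if keep_greater else p[axis] <= bound

        clipped = []
        for i, cur in enumerate(face):
            prev = face[i - 1]               # wraps around to the last vertex
            if inside(cur) != inside(prev):
                # The edge crosses the boundary: interpolate all components.
                t = (bound - prev[axis]) / (cur[axis] - prev[axis])
                clipped.append(tuple(a + t * (b - a)
                                     for a, b in zip(prev, cur)))
            if inside(cur):
                clipped.append(cur)
        face = clipped
        if not face:
            break                            # dropped: nothing left to draw
    return face

# A face that hangs over the right border of the input image (u > 1).
quad = [(0.5, 0.25, 5.0, 2.5), (1.5, 0.25, 15.0, 2.5),
        (1.5, 0.75, 15.0, 7.5), (0.5, 0.75, 5.0, 7.5)]
clipped_quad = clip_face(quad)
```

A face entirely outside the image comes back as an empty list, which corresponds to the faces dropped during clipping for small images.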

Advantages:

- There are no problems at the poles and edges of the panorama.

- The output is of uniform quality throughout, as the faces always have the same density.

Implementation: This is implemented in the TexCoordRemapper object. It is used for images that cross the poles in many cylindrical-type projections, and for images that reach the outer ring of disk-like projections (e.g. fisheye). It is also used in Albers equal-area conic projection, since the +/-180° boundary correction is difficult to implement there, and the poles need it anyway.

Distortions Comparison

To check that these transformation methods do not distort the image too much, I devised a test. Using Nona to produce a coordinate transformation map from input image coordinates to output image coordinates, and similarly creating a projection that goes the other way, I tried these transformations in Blender.

I projected a cylindrical panorama, 360° wide, and with a pixel resolution 4 times as wide as it is tall, into a fisheye image pointing at the lower pole of the input image, 270° wide, and square.

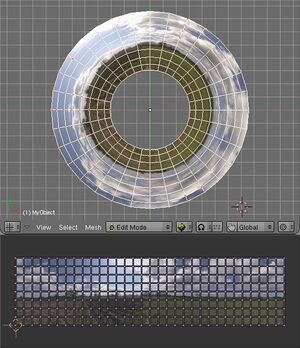

Vertex mapping Approximate Transformation

This mesh has 32 columns of faces, and 8 rows, across the input image. Since the image is 4 times as wide as it is tall, this means there are 256 faces which cover an equal amount of the input image.

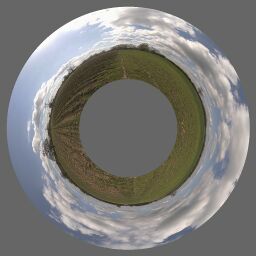

Transformation with Nona

This is the remapping that the other images are approximating.

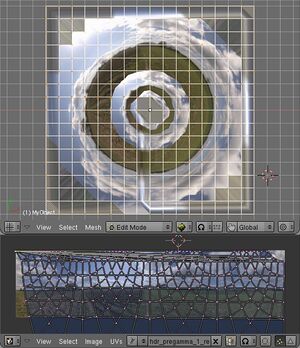

Texture Coordinate mapping Approximate Transformation

This mesh has 16 rows of faces and 16 columns, spread equally over the output panorama, so there are 256 faces which cover an equal amount of the output. Note that for these images I have not attempted to correct the texture coordinates around the boundary of the input image.

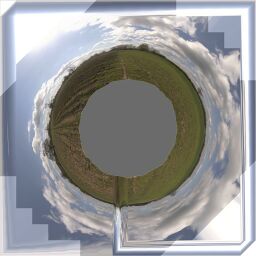

Another test performed was mapping a rectilinear image to the zenith of an equirectangular image.

Modules

See main article: Hugin's OpenGL preview system overview

Building and Usage

The OpenGL preview is not in the main Hugin branch yet. If you wish to experiment, and don't mind compiling it yourself, you can check out the latest version using svn with:

<source lang="bash">
svn co https://hugin.svn.sourceforge.net/svnroot/hugin/hugin/branches/gsoc2008_opengl_preview hugin
</source>

For help compiling, you might want to read Hugin Compiling OSX or Hugin Compiling Windows, depending on your operating system. The preview adds extra dependencies: you'll need glew, and also the wxGLCanvas object in the wxWidgets library (which is not compiled by default on Windows).

See Hugin Fast Preview window for usage instructions.